Back in late 2019 I made the decision to transition away from being a Tech Field Day event lead and Analyst for Gestalt IT and get back to my roots as an engineer. Thankfully Stephen Foskett, my employer and the creator of Tech Field Day, was understanding and we parted on good terms. We even discussed my eventual return to Field Day as a delegate after I had settled in at the new gig. Well I’m thrilled that the time has finally come as Stephen has invited me to Cloud Field Day 10, which is taking place March 10-12, 2021.

The event looks to have an excellent line up of sponsors which can be found on the event page.

As far as I can tell, all of the companies presenting are Field Day veterans, but I haven’t personally heard from all of them. Sometimes the Field Day crew are able to get information about the presentations ahead of time from the sponsors to help delegates prep.

Some presenters have shared info thus far while others have not. In the case where the presentation topic is unknown I am just going to review the lineup and take a wild guess about what kind of presentation to expect.

Veeam

Having presented at 13 previous Field Day events, I would expect the Product Strategy Team, led by former Field Day Delegate Rick Vanover to nail this event. I imagine we will be hearing a lot about the new features of their newly released version 11 of their product. As a former Veeam Vanguard and long time fan of the company, I have high hopes for this one.

Komprise

Komprise has previously presented at Storage Field Day a couple of times previously, but not Cloud Field Data. They bill themselves as a data management company. There is no doubt that the modern enterprise is drowning in data whether their applications live in the cloud or not. I’m interested to hear what they have to say about their capabilities specific to the enterprise cloud.

Intel

Intel is no stranger to Tech Field Day, or the tech industry in general. They are tech titan in fact. Surprising, with all of Intel’s experience with Field Day events, this will be their first appearance at a Cloud Field Day event. Having said that, being involved in the Cloud Discussion is not new to Intel as they took part in a Cloud Influencer Roundtable at VMworld 2019.

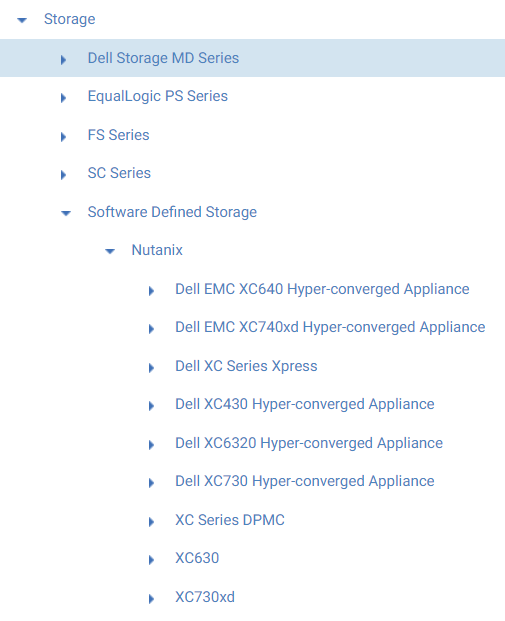

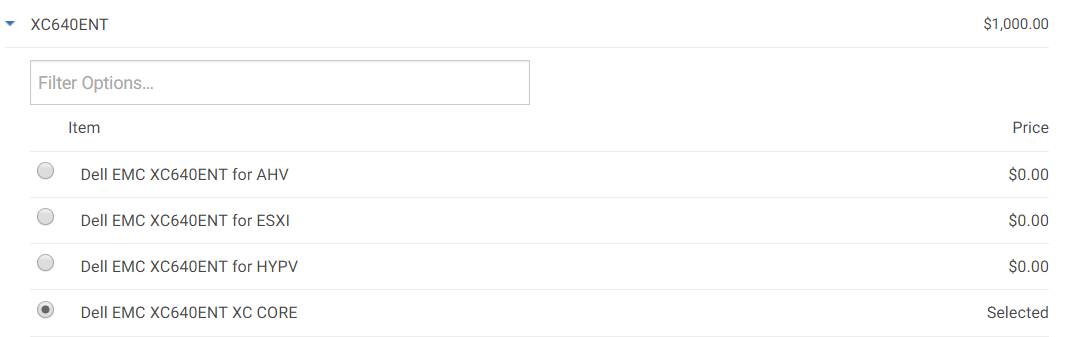

Dell Technologies

The Dell Technologies Cloud strategy is one that we have seen evolving over the years. I would expect to see a more robust offering around creating a private and hybrid cloud centered around their VxRail platform on-premises and offerings like VMware Cloud on AWS. However, last time Dell presented at a Cloud Field Day event, they basically co-presented with VMware. However this time, though both companies will be present at the event, they will be presenting on different days. Does this mean we will hear about more than their optimized solutions around VMware technologies? I look forward to finding out!

VMware

No stranger to Cloud Field Day, or Tech Field Day in general It appears as though VMware will be providing an update on VMware Cloud on AWS at Cloud Field Day 10. Multiple VMware on “X” cloud solutions exist now, but VMC on AWS was the first. VMware’s main differentiation for their offering up to this point has been that their offering was both developed by VMware engineers and is supported by VMware. VMware recently published a blog post in preparation for CFD10 which can be found here. Looks like the topic of discussion will be around VMC as a platform for modern applications (read K8S based on Tanzu). I’m looking forward to this one and the interactions to come.

NetApp

NetApp is another prolific Tech Field Day sponsor and well known in the industry. Known as a storage company, NetApp has surprised a few folks with past presentations that have more to do with modern applications and cloud than you would expect. That looks to be the case this time around as well. I have seen a preview of NetApp’s agenda and it looks to be focused on Kubernetes and NetApp’s recent acquisition of Astra, a data management platform for Kubernetes workloads.

Scality

Another Field Day vet, Scaility is known for the scale-out storage platform that provides both object storage and file services. Having seen a preview of Scality’s agenda, I can say that this is another session that I am really looking forward to. The topics look to be centered around data sovereignty and regulation.

Why would I be looking forward to this conversation you may ask? As technologists, we often get excited and geek out about techs and specs while ignoring or disregard the non-technical realities that affect our industry. While I’m sure Scality will find opportunities to turn these issues back to their product and how it helps solve these issues for enterprise IT shops, it is this kind of mindfulness that is missing from many IT vendors and practitioners alike. Being able to map actual business concerns back to a solution is how IT can really show it’s value.

StorageOS

I’ll admit that I’m not very familiar with StorageOS, but I am looking forward to hearing from them. A quick look at their website gave me a pretty good idea of what their pitch is. Primarily a persistent storage platform for stateful applications, StorageOS is leaning into K8S as much as anyone else in the industry. It appears that this will be the company’s first appearance at Cloud Field Day, and their first event sine their debut at Tech Field Day 12 over 4 years ago! I’m sure a lot has change since then, so I’m going to try to go back and watch their previous appearance ahead of the CFD10 presentation for comparison’s sake.

Oracle

Oracle has been at previous Field Day events, but this is their first time presenting since they released their VMware Cloud solution. I don’t know for a fact that this is what they will be presenting on, but I’m willing to bet that they will. I’ve noticed a focus in marketing and promotion of this solution recently throughout the tech community and expect them to do the same at Field Day. As there are similar solutions on pretty much every hyperscaler at this point, I’m looking forward to hearing how Oracle’s offering is uniquely differentiated.

Wow, that’s a lot of presentations! I’m really looking forward to this event, and hope you can join in! Be sure to tune into the presentations next week from March 10-12. All presentation times can be found on the the Cloud Field Day 10 event page. Be sure to tune in and interact with the delegates and presenters on Twitter using the hashtag #CFD10. See you then!